Students using AI: When is it a tool and when is it cheating?

A cool new article by Tu et al. made me wonder about the future of AI in data science and education.

I was fortunate to take graduate-level statistics from the person I consider the best teacher I’ve ever encountered: Greg Hancock. I can’t tell you how many times his clear and compelling explanations of abstract statistical concepts left me, and the rest of the class, completely agog. I can remember him doing all the cross-products for an ANOVA, on the blackboard, manually, to show us how the math worked. It was bananas. Then he had us do it! And that understanding of the math really helped when, eventually, he moved us to using statistical software that could do the math for us. And then, on his tests, he’d give us actual stat software output with key values whited out. We’d have to use the other information to calculate the missing values, to show that we understood the math and concepts underlying the analyses. He helped us see the software as a tool to do the grunt work, rather than something you poke at until you get output that looks good. The software was a tool that freed us up to do the conceptual thinking that truly defined our research. Amazing stuff.
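For anyone who never had to do this by hand, here is a rough sketch of the kind of arithmetic involved. It is my own illustration, not Greg's actual exercise: I'm assuming a simple one-way ANOVA, the scores are made up, and the function and variable names (`one_way_anova`, `groups`) are hypothetical.

```python
# Hand-computed one-way ANOVA on made-up scores (illustration only).
# Assumption: three groups, one factor. The data are invented for this sketch.
groups = {
    "A": [4.0, 5.0, 6.0],
    "B": [7.0, 8.0, 9.0],
    "C": [1.0, 2.0, 3.0],
}


def mean(xs):
    """Arithmetic mean of a list of numbers."""
    return sum(xs) / len(xs)


def one_way_anova(groups):
    """Build the ANOVA table pieces from raw scores, step by step."""
    all_scores = [x for xs in groups.values() for x in xs]
    grand_mean = mean(all_scores)

    # Between-groups: how far each group mean sits from the grand mean,
    # weighted by group size.
    ss_between = sum(
        len(xs) * (mean(xs) - grand_mean) ** 2 for xs in groups.values()
    )
    # Within-groups: how far each score sits from its own group mean.
    ss_within = sum(
        (x - mean(xs)) ** 2 for xs in groups.values() for x in xs
    )
    # Total: how far each score sits from the grand mean.
    ss_total = sum((x - grand_mean) ** 2 for x in all_scores)

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within

    return {
        "ss_between": ss_between,
        "ss_within": ss_within,
        "ss_total": ss_total,
        "df_between": df_between,
        "df_within": df_within,
        "F": ms_between / ms_within,
    }


table = one_way_anova(groups)

# The "whited out" exercise in miniature: if SS between were hidden,
# it could be recovered from the two values still visible in the output.
recovered_ss_between = table["ss_total"] - table["ss_within"]

print(table)
print("Recovered SS between:", recovered_ss_between)
```

With these made-up numbers, the sums of squares come out to 54 between groups and 6 within groups, for an F of 27. The point is not the numbers. It's that once you have built the table yourself, a blanked-out cell in software output becomes a solvable puzzle rather than a mystery.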

A new paper on AI and data science education, by Tu and colleagues (h/t to Ethan Mollick for sharing the paper on LinkedIn), reminded me of Greg’s classes. Among other things, they argued that AI could replace a lot of the basic work in data science (e.g., data cleaning, data exploration, developing prediction models, creating figures), freeing data scientists up to do the conceptual thinking and planning. They argued AI could change humans’ role from data scientist to product manager. Kind of like how stat software freed me up from being a mathematics workhorse to a…well…a data scientist.

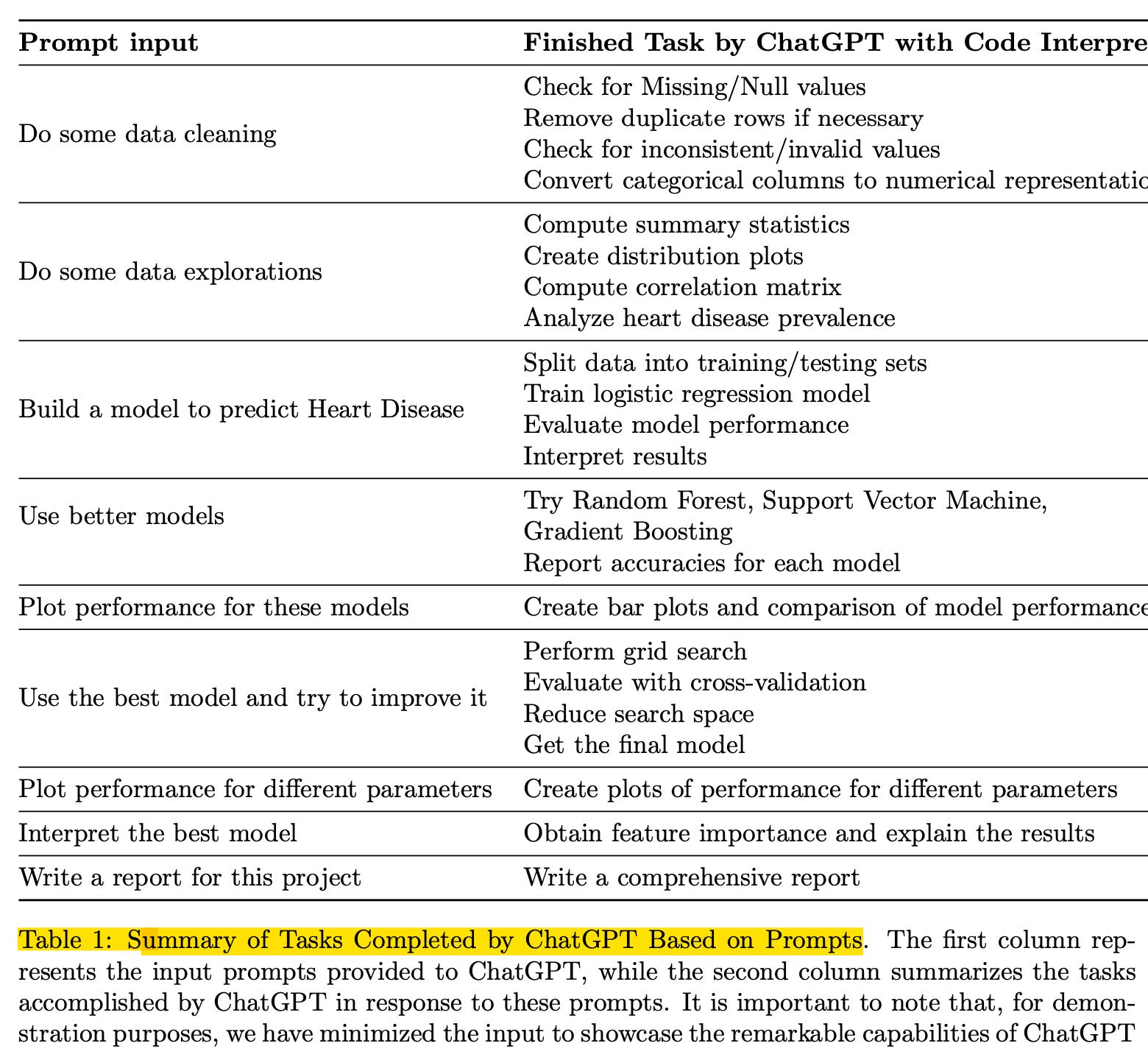

I have to say, I was pretty stunned at what they got ChatGPT to do with very little prompting. I mean, look at this:

Yowza. So, if a student uses ChatGPT to go right from a dataset to a full analysis with a report, is that cheating? Or is it a tool, like statistical software? Of course, it all depends upon what the instructor’s learning objectives and assessments are, but this paper did make me think. It might not be too long before we ask students to do the steps in the left-hand column of Table 1 just a few times, to make sure they understand them (just like Greg asked us to do cross-products ourselves once or twice), before allowing them to have AI do it for them in the future, just as statistical software does for me now. I don’t think that would be cheating, but I do think students had better be able to tell when ChatGPT gets things wrong, and that will require doing cross-products, etc. on their own, at least a few times.